16 The Salesbot

In this chapter we continue our exploration of augmentation techniques, generalizing what we’ve learned so far to more effective and maintainable RAG approaches.

So far, we’ve used RAG in a fairly static way, in the sense that the chatbot has very little control over what gets sent to the search engine. In the basic RAG architecture we built in Chapter 14, the LLM has no say at all in choosing the query. We simply embed the original user query and use it as the key for our search engine, i.e., the vector store. In Chapter 15 we went a bit further by letting the LLM see the original user query and propose alternative, hopefully more semantically relevant, queries for the search engine.
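The difference between these two retrieval styles fits in a few lines of Python. This is a minimal sketch, not the code from Chapters 14 and 15: the bag-of-words `embed` function, the in-memory `documents` list, and `fake_rewrite` (a stand-in for the LLM call that proposes alternative queries) are all hypothetical placeholders.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    # Hypothetical toy "embedding": a bag-of-words count vector.
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

documents = [
    "waterproof hiking boots for winter",
    "lightweight running shoes",
    "insulated winter jacket",
]

def search(query: str, k: int = 1) -> list[str]:
    # Stand-in for the vector store: rank documents by similarity, drop zero matches.
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in documents]
    hits = [d for s, d in sorted(scored, key=lambda p: p[0], reverse=True) if s > 0]
    return hits[:k]

def basic_rag(user_query: str) -> list[str]:
    # Chapter 14 style: the raw user query is the search key; the LLM is not involved.
    return search(user_query)

def fake_rewrite(user_query: str) -> list[str]:
    # Hypothetical stand-in for an LLM that proposes alternative queries.
    return ["winter boots", "winter jacket"]

def rewritten_rag(user_query: str) -> list[str]:
    # Chapter 15 style: search with the original and the LLM-proposed queries, merge results.
    results: list[str] = []
    for q in [user_query] + fake_rewrite(user_query):
        for doc in search(q):
            if doc not in results:
                results.append(doc)
    return results

print(basic_rag("what should I wear in the snow"))      # → []
print(rewritten_rag("what should I wear in the snow"))  # → ['waterproof hiking boots for winter', 'insulated winter jacket']
```

In both cases the application, not the LLM, decides that a search happens and which function performs it; the LLM at most influences the query text.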

However, these two approaches are still insufficient for the most interesting use cases. Imagine our search engine has an API that supports one or more arguments beyond a text query. Furthermore, suppose this API has more functions, some of which may cause side effects, such as adding an object to a database or modifying some state in our application. We would like our chatbot to be able to determine which of these functions to call (if any) and with which parameters.
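Because some of these functions have side effects, it is prudent to check a call proposed by the model (a function name plus a dictionary of arguments) against the available signatures before executing anything. The sketch below assumes a hypothetical two-function API; `search_products`, `save_item`, `validate_call`, and `call_if_valid` are illustrative names, and the check relies on the standard library's `inspect.signature(...).bind`.

```python
import inspect
from typing import Optional

saved_items: list[str] = []  # application state that a function call may modify

def search_products(query: str, max_price: Optional[float] = None) -> list[str]:
    """Read-only: a text query plus an optional extra argument."""
    return [f"result for {query!r} under {max_price}"]

def save_item(item: str) -> None:
    """Has a side effect: mutates application state."""
    saved_items.append(item)

# Hypothetical API exposed to the chatbot, keyed by function name.
API = {"search_products": search_products, "save_item": save_item}

def validate_call(name: str, args: dict) -> bool:
    # Reject unknown functions and argument sets that don't fit the signature.
    func = API.get(name)
    if func is None:
        return False
    try:
        inspect.signature(func).bind(**args)
    except TypeError:
        return False
    return True

def call_if_valid(name: str, args: dict):
    # Only execute (and possibly cause side effects) after validation succeeds.
    if not validate_call(name, args):
        raise ValueError(f"Rejected call: {name}({args})")
    return API[name](**args)

print(validate_call("search_products", {"query": "boots", "max_price": 50}))  # True
print(validate_call("save_item", {}))          # False: missing required argument
print(validate_call("delete_everything", {}))  # False: unknown function
call_if_valid("save_item", {"item": "boots"})
print(saved_items)  # ['boots']
```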

You can probably see how to extend the basic RAG approach to support multiple functions with parameters by using some elaborate prompting scheme. This is precisely what we will do in this chapter, but instead of implementing all the prompting ourselves, we will rely on the LLM provider to do it. Before reading the remainder of this chapter, make sure to check our theoretical introduction to function calling in Chapter 8.
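For illustration, a hand-rolled prompting scheme might render each function’s signature and docstring into the prompt and ask the model to reply with JSON whenever it wants a call. This is a sketch of the general idea only; the function stubs, the `build_prompt` helper, and the JSON reply convention are hypothetical choices, not the prompt any provider uses internally.

```python
import inspect

def search_products(query: str, max_results: int = 5):
    """Search the catalog for products matching a free-text query."""

def add_to_cart(product_id: int, quantity: int = 1):
    """Add a quantity of a product to the shopping cart."""

def build_prompt(functions, user_message: str) -> str:
    lines = ["You can call the following functions:"]
    for f in functions:
        # Renders e.g. "- search_products(query: str, max_results: int = 5): Search the ..."
        lines.append(f"- {f.__name__}{inspect.signature(f)}: {inspect.getdoc(f)}")
    lines.append(
        'To call one, reply ONLY with JSON like {"function": "<name>", "arguments": {...}}. '
        "Otherwise, answer the user normally."
    )
    lines.append(f"User: {user_message}")
    return "\n".join(lines)

print(build_prompt([search_products, add_to_cart], "I need winter boots"))
```

Keeping such a prompt in sync with the real functions, and parsing whatever the model sends back, is exactly the repetitive work a provider’s function-calling API takes off our hands.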

The plan

Our goal for this chapter is to build a shopping assistant for an arbitrary (randomly generated) online store. Our bot should support four basic functions:

- Search for and suggest products.

- Add products to a shopping cart.

- Give information on the current shopping cart.

- Clear the shopping cart.
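A minimal in-memory backend for these four operations might look like the following. The catalog here is a small fixed list rather than a randomly generated one, so the behavior is easy to follow, and every name (`Product`, `Store`, `search`, `add_to_cart`, `cart_info`, `clear_cart`) is a hypothetical choice for this sketch.

```python
from dataclasses import dataclass, field

@dataclass
class Product:
    id: int
    name: str
    price: float

@dataclass
class Store:
    catalog: list[Product]
    cart: dict[int, int] = field(default_factory=dict)  # product id -> quantity

    def search(self, query: str) -> list[Product]:
        # Naive keyword match; the actual bot would query a vector store instead.
        words = query.lower().split()
        return [p for p in self.catalog if any(w in p.name.lower() for w in words)]

    def add_to_cart(self, product_id: int, quantity: int = 1) -> str:
        if not any(p.id == product_id for p in self.catalog):
            return f"Unknown product {product_id}."
        self.cart[product_id] = self.cart.get(product_id, 0) + quantity
        return f"Added {quantity} x product {product_id}."

    def cart_info(self) -> dict:
        # Report quantities per product and the cart total.
        prices = {p.id: p.price for p in self.catalog}
        total = sum(prices[pid] * qty for pid, qty in self.cart.items())
        return {"items": dict(self.cart), "total": round(total, 2)}

    def clear_cart(self) -> str:
        self.cart.clear()
        return "Cart cleared."

store = Store([Product(1, "Trail Boots", 89.99), Product(2, "Wool Socks", 12.5)])
print(store.search("boots"))
print(store.add_to_cart(2, quantity=3))
print(store.cart_info())  # {'items': {2: 3}, 'total': 37.5}
```

Returning short strings and plain dictionaries is a deliberate choice: whatever these functions return will eventually be serialized and shown to the LLM.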

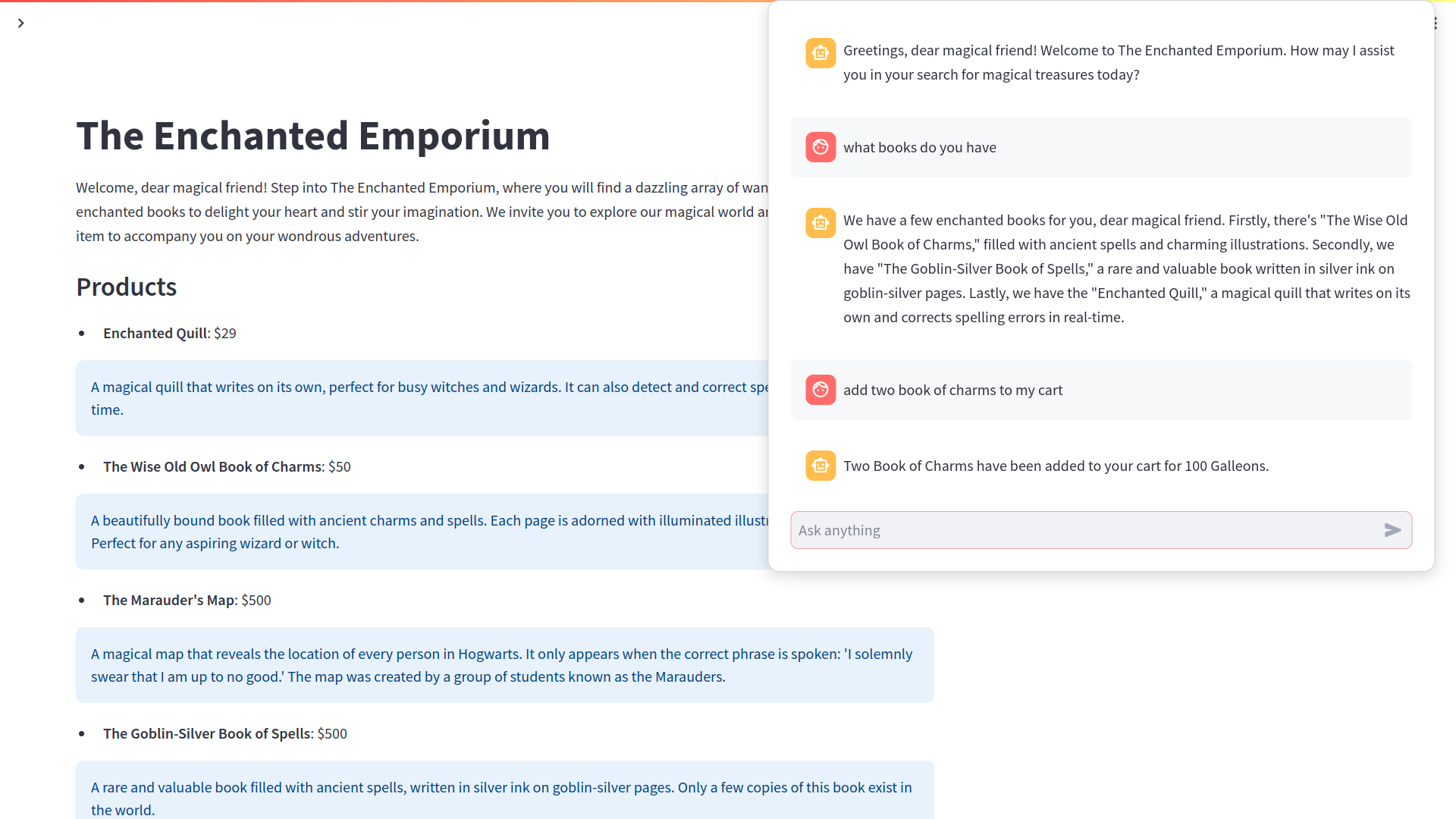

Here is a screenshot:

A typical interaction between a user and our bot can go as follows:

- The user asks about some products or describes a need.

- The chatbot invokes an API function to search for products related to the user query.

- The search API provides a list of relevant products.

- The chatbot replies to the user with an elaborate response built from the retrieved information.

- The user asks for some of these products to be added to the cart.

- The bot identifies the right product and quantity and invokes the API function to modify the cart.

- And so on…
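The interaction above is essentially a loop: the model either requests a function call or produces a final answer, and every function result is appended to the conversation before the model is consulted again. The sketch below replays that loop with a scripted stand-in for the LLM. The message format (`role`, `content`, `call`), `scripted_model`, and `run_turn` are assumptions made for illustration, not any provider’s actual API.

```python
import json

def search_products(query: str) -> list[str]:
    # Hypothetical backend; a real bot would query the store here.
    return ["Trail Boots ($89.99)", "Snow Boots ($120.00)"]

FUNCTIONS = {"search_products": search_products}

def scripted_model(messages: list[dict]) -> dict:
    # Stand-in for the LLM: request a search first, then answer from its result.
    last = messages[-1]
    if last["role"] == "user":
        call = {"name": "search_products", "args": {"query": last["content"]}}
        return {"role": "assistant", "call": call}
    products = json.loads(last["content"])
    return {"role": "assistant", "content": "You might like: " + ", ".join(products)}

def run_turn(messages: list[dict], model=scripted_model, max_steps: int = 5) -> str:
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        call = reply.get("call")
        if call is None:
            return reply["content"]  # no function requested: this is the final answer
        result = FUNCTIONS[call["name"]](**call["args"])
        # Inject the function result back into the conversation for the next model step.
        messages.append({"role": "tool", "name": call["name"], "content": json.dumps(result)})
    raise RuntimeError("Too many function calls in a single turn.")

conversation = [{"role": "user", "content": "boots for hiking in the snow"}]
print(run_turn(conversation))
```

The `max_steps` guard is a design choice worth keeping in any real version: nothing forces a model to stop requesting function calls.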

To implement this functionality we need to solve the following problems:

- How to determine when to call an API function, which function, and with which parameters.

- How to allow the chatbot to invoke the corresponding function and inject the results of the function call back into the chatbot conversation.

- How to implement the specific functions needed in this example.
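When function calling is done through prompting alone, the first problem usually reduces to parsing: the prompt asks the model to answer either in plain text or with a JSON object naming a function and its arguments, and the application inspects each reply to tell the two apart. The sketch below assumes that hypothetical reply convention (`{"function": ..., "arguments": {...}}`); `FunctionCall` and `parse_reply` are illustrative names, and a provider’s function-calling API returns this information in structured fields instead.

```python
import json
from dataclasses import dataclass
from typing import Optional

@dataclass
class FunctionCall:
    name: str
    arguments: dict

def parse_reply(text: str, known: set[str]) -> Optional[FunctionCall]:
    """Return a FunctionCall if the reply requests one, else None (a plain answer)."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None  # not JSON: treat it as a normal chat reply
    if not isinstance(data, dict):
        return None
    name = data.get("function")
    if not isinstance(name, str) or name not in known:
        return None  # missing or unknown function name
    args = data.get("arguments", {})
    if not isinstance(args, dict):
        return None
    return FunctionCall(name, args)

known = {"search", "add_to_cart", "cart_info", "clear_cart"}
print(parse_reply('{"function": "add_to_cart", "arguments": {"product_id": 2, "quantity": 3}}', known))
print(parse_reply("Sure! Here are some boots you might like.", known))  # None
```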

Let’s tackle them one at a time.

Function calling from scratch

As we saw in Chapter 8, the traditional approach to function calling involves building a detailed prompt that includes definitions for the available functions, their arguments, and suitable descriptions that help the LLM determine the best function for a given context. Since this process is repetitive and error-prone, most LLM providers have a dedicated API for function calling, enabling developers to submit a structured schema for the function definitions.
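Such a schema can be derived mechanically from a Python function’s signature and docstring. The sketch below builds a JSON Schema description of the parameters and wraps it in the `{"type": "function", "function": {...}}` layout used by the `tools` parameter of OpenAI’s Chat Completions API; other providers expect similar but not identical structures, so treat the exact layout as an assumption to check against your provider’s documentation. The `to_tool_schema` helper is hypothetical and only handles basic parameter types.

```python
import inspect
import json

# Map Python annotations to JSON Schema types (basic cases only).
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def add_to_cart(product_id: int, quantity: int = 1):
    """Add a quantity of a product to the shopping cart."""

def to_tool_schema(func) -> dict:
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {"type": JSON_TYPES[param.annotation]}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means the argument is mandatory
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func),
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }

print(json.dumps(to_tool_schema(add_to_cart), indent=2))
```

Generating the schema from the function itself keeps the definition the LLM sees in sync with the code that actually runs.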